Architecture, whether applied to software or to buildings, is the link between aspirations and technology, both of which are in continuous evolution. The Internet and Cloud Computing have triggered a radical change in our civilization, both in personal relationships and in the way business is done, so a move to new IT architectures is inevitable.

One of the main differences between traditional monolithic software applications and those designed specifically for the Cloud is the decomposition into loosely coupled services. A service is an autonomous, cohesive set of functionalities exposed through APIs (Application Programming Interfaces) that cooperates, through asynchronous messages (much like email), with other services and with users, even those of different companies, according to the principle of maximum hardware and software sharing known as “multi-tenancy”. This architecture ensures maximum scalability and efficiency because, at any moment, each individual service can use as many machines as it needs to meet its service level agreement (SLA), without wasting resources or energy. Moreover, the elastic coupling provided by message queues lets each service work at full capacity without external interference, while at the same time absorbing the peaks and valleys in the number of requests generated by users.
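The decoupling effect of a message queue can be illustrated with a minimal sketch, assuming two hypothetical services running in one Python process, with an `asyncio.Queue` standing in for a real message broker: a producer publishes orders in bursts, while a consumer drains them at its own steady pace. The names `producer`, `consumer` and `run_pipeline`, the burst sizes and the timings are illustrative only.

```python
import asyncio


async def producer(queue: asyncio.Queue, bursts: list[int], stats: dict) -> None:
    """Hypothetical front-end service that publishes order messages in bursts."""
    order_id = 0
    for burst_size in bursts:
        for _ in range(burst_size):
            # On an unbounded queue, put() never waits: the sender is not slowed down.
            await queue.put({"order_id": order_id})
            order_id += 1
            stats["peak"] = max(stats["peak"], queue.qsize())
        await asyncio.sleep(0.01)  # quiet period ("valley") between peaks
    await queue.put(None)  # sentinel: no more messages


async def consumer(queue: asyncio.Queue, processed: list[int]) -> None:
    """Hypothetical back-end service that works at its own steady pace."""
    while True:
        msg = await queue.get()
        if msg is None:
            break
        await asyncio.sleep(0.001)  # simulated processing cost per message
        processed.append(msg["order_id"])


async def run_pipeline(bursts: list[int]) -> tuple[list[int], int]:
    queue: asyncio.Queue = asyncio.Queue()  # in-memory stand-in for a message broker
    processed: list[int] = []
    stats = {"peak": 0}
    worker = asyncio.create_task(consumer(queue, processed))
    await producer(queue, bursts, stats)
    await worker  # returns once the consumer reaches the sentinel
    return processed, stats["peak"]


if __name__ == "__main__":
    done, peak = asyncio.run(run_pipeline([50, 0, 5, 30]))
    print(f"processed {len(done)} messages, peak queue depth {peak}")
```

The peak queue depth shows the buffer absorbing a burst that the consumer could not handle instantly, while every message is still processed in order; in production the in-memory queue would be replaced by a durable broker so that messages survive restarts.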

Another modern Cloud Architecture trend is distributed computing, which can be applied at various levels. Slogans such as “Intelligent Cloud + Intelligent Edge” and “Edge Computing” refer to its implementation at the highest level. For example, in a retail system such as aKite, the Point of Sale is an App that keeps working without interruption whether or not it is connected to the Internet. Any Tablet or PC is powerful enough to handle normal sales activities locally and to collaborate, through messages, with more complex services that reside in the cloud, such as warehouse management or sales forecasting. Although more complex than a centralized system built on a single database, these architectures offer not only better responsiveness to users and better quality of service but also greater efficiency and scalability, because local processing offloads the Cloud, which can then serve many more users with the same resources. These are some of the reasons for the success of Apps in the mobile world. The latest trend is to move even complex Artificial Intelligence functions, such as voice and face recognition, to the edge. Let us now turn to the advantages of distributed computing at the lowest level, among the services inside the Cloud.
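The offline-first behavior of the Point of Sale can be sketched with the common “outbox” pattern: every sale is completed locally and also queued as a message, and queued messages are forwarded to the cloud only when a connection is available. This is an assumption about how such an App might work, not aKite's actual code; the `PointOfSale` class, the `send` callback and `offline_send` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PointOfSale:
    """Hypothetical offline-first POS: sales never depend on the connection."""
    local_sales: list[dict] = field(default_factory=list)
    outbox: list[dict] = field(default_factory=list)

    def record_sale(self, item: str, amount_cents: int) -> None:
        sale = {"item": item, "amount_cents": amount_cents}
        self.local_sales.append(sale)  # the sale is completed locally right away
        self.outbox.append(sale)       # and queued for the cloud services

    def sync(self, send: Callable[[dict], None]) -> int:
        """Forward queued messages in order; stop at the first network failure."""
        sent = 0
        while self.outbox:
            try:
                send(self.outbox[0])
            except ConnectionError:
                break  # still offline: keep the remaining messages for later
            self.outbox.pop(0)
            sent += 1
        return sent


def offline_send(message: dict) -> None:
    """Hypothetical transport used while the Internet connection is down."""
    raise ConnectionError("no Internet connection")


if __name__ == "__main__":
    pos = PointOfSale()
    pos.record_sale("espresso", 120)
    pos.record_sale("croissant", 150)
    print(pos.sync(offline_send), "sent while offline")
    cloud_inbox: list[dict] = []
    print(pos.sync(cloud_inbox.append), "sent once back online")
```

Selling never waits for the network; only the synchronization step does, and a failed attempt simply leaves the messages in the outbox for the next try.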

Service Orchestration

Initially, Cloud services ran on dedicated Virtual Machines that were updated, activated and deactivated by “orchestration” software. In recent years, the search for greater efficiency has led to Containers and Microservices. The term Containers deliberately recalls the physical shipping containers that have brought so many advantages to the transport of goods: their standard dimensions make automated handling easier, and the absence of traction and wheels lowers costs and increases the density of goods in long-distance transport. Similarly, software containers expose standard interfaces and need lighter infrastructure than Virtual Machines, while Microservices are even more efficient, reducing the infrastructure overhead further.

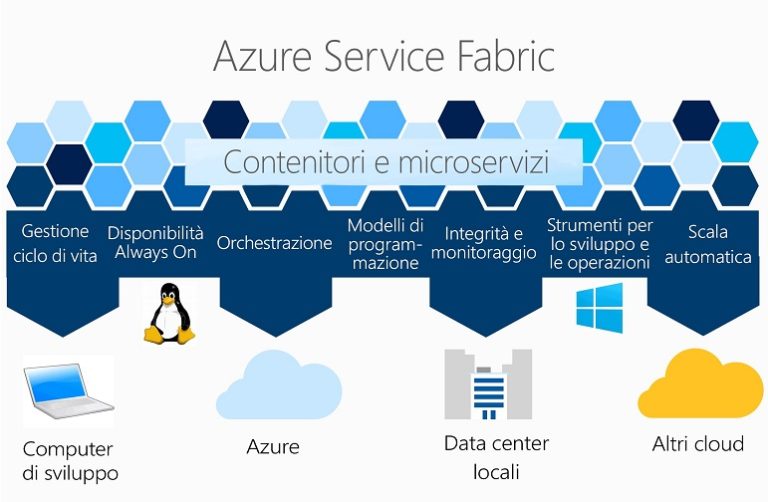

The most popular orchestration software for Containers is Kubernetes, originally developed by Google and now available on all major Cloud platforms. Microsoft's Azure Service Fabric plays a similar role but is designed to orchestrate both Containers and Microservices without a single master: its place is taken by intelligence distributed across the nodes, each of which typically hosts dozens of Containers or hundreds of Microservices. Each node continuously synchronizes with the others and detects faults or overloads, increasing both resilience (the ability to withstand failures or anomalous situations) and scalability (the ability to use anywhere from one to thousands of machines in parallel, depending on the workload).
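Masterless fault detection can be illustrated with a generic heartbeat-and-timeout failure detector, in which every node independently tracks when it last heard from each peer. This is a simplified, hypothetical sketch, not Service Fabric's actual protocol; the `Node` class and `exchange_heartbeats` are illustrative names, and time is passed in explicitly so the simulation is deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical cluster node that keeps its own view of its peers' health."""
    name: str
    timeout: float  # seconds of silence before a peer is suspected
    last_seen: dict[str, float] = field(default_factory=dict)

    def receive_heartbeat(self, peer: str, now: float) -> None:
        self.last_seen[peer] = now

    def suspected_peers(self, now: float) -> set[str]:
        return {peer for peer, seen in self.last_seen.items() if now - seen > self.timeout}


def exchange_heartbeats(nodes: list[Node], alive: set[str], now: float) -> None:
    """Every live node sends a heartbeat to every other live node; no master is involved."""
    for sender in nodes:
        if sender.name not in alive:
            continue
        for receiver in nodes:
            if receiver is not sender and receiver.name in alive:
                receiver.receive_heartbeat(sender.name, now)


if __name__ == "__main__":
    nodes = [Node(n, timeout=3.0) for n in ("A", "B", "C")]
    for t in range(3):  # t = 0, 1, 2: all nodes healthy
        exchange_heartbeats(nodes, {"A", "B", "C"}, float(t))
    for t in range(3, 7):  # t = 3..6: node C has crashed
        exchange_heartbeats(nodes, {"A", "B"}, float(t))
    for node in nodes[:2]:
        print(node.name, "suspects", node.suspected_peers(6.0))
```

Because each surviving node reaches the same verdict on its own, no central master is needed to notice that node C has stopped responding.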

Azure Service Fabric can be used with both Linux and Windows, in any Cloud and even locally.

Other advantages of Cloud-native architectures

The specialization and autonomy of software services also bring major advantages for development, deployment and maintenance (DevOps, in short), because each service can be managed by a small team working with relative autonomy. This allows evolution in small, simple and continuous steps, since each service can be updated and released independently of the others. Adding APIs that expose new features must never break existing APIs, so that other teams can decide for themselves whether and when to adopt the new ones. Adding an entirely new service is just as smooth.
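The rule that new APIs must never break existing ones can be shown with a minimal sketch of additive versioning: `v2` reuses the `v1` response and only adds fields, so clients that still call `v1` see no change. The routing table, the `get_receipt` endpoint, its hard-coded payload and the `is_backward_compatible` check are all hypothetical.

```python
from typing import Callable

Handler = Callable[[dict], dict]
ROUTES: dict[tuple[str, str], Handler] = {}


def route(version: str, name: str) -> Callable[[Handler], Handler]:
    """Register a handler under (version, name) in the hypothetical routing table."""
    def register(handler: Handler) -> Handler:
        ROUTES[(version, name)] = handler
        return handler
    return register


@route("v1", "get_receipt")
def get_receipt_v1(request: dict) -> dict:
    return {"id": request["id"], "total_cents": 1250}  # hard-coded sample data


@route("v2", "get_receipt")
def get_receipt_v2(request: dict) -> dict:
    # Additive change: reuse the v1 payload unchanged and only append new fields.
    return {**get_receipt_v1(request), "currency": "EUR"}


def call(version: str, name: str, request: dict) -> dict:
    try:
        handler = ROUTES[(version, name)]
    except KeyError:
        raise LookupError(f"unknown API {version}/{name}") from None
    return handler(request)


def is_backward_compatible(old: dict, new: dict) -> bool:
    """True if every field of the old response is still present with the same value."""
    return all(key in new and new[key] == value for key, value in old.items())


if __name__ == "__main__":
    old = call("v1", "get_receipt", {"id": 7})
    new = call("v2", "get_receipt", {"id": 7})
    print(old, new, is_backward_compatible(old, new))
```

A team consuming `v1` can keep doing so indefinitely, and a check like `is_backward_compatible` could run in the publishing team's tests before each independent release.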

By contrast, evolving a traditional monolithic system requires a great deal of coordination among product managers, programmers and operations staff, so much so that releasing a new version, where a small mistake can bring down the entire application, is known as a “big-bang”.

With Cloud-native architectures, the shift towards more agile, affordable and reliable software development and management could not be more decisive. As usual, the main obstacle is cultural change.

The aKite experience

aKite has been Cloud-native since its first release in 2009 and is therefore built on a federation of services that cooperate with one another in the Cloud and with the Apps running in the shops. Each service initially required at least one virtual machine, naturally shared among all users according to the principle of multi-tenancy. Recently these services were converted, rather easily, into Microservices orchestrated by Azure Service Fabric. The gain in efficiency and density is visible, for example, at night, when activity drops and fewer active machines are needed; scalability is also greater and easier to automate, because the technology is the same one Microsoft has used for years to run software services (SaaS), including some with millions of users.
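The night-time saving comes from sizing each service to its current load. A simplified scaling rule, assuming a hypothetical per-instance capacity and a minimum number of instances kept for resilience, can be written as follows; the load figures are invented, and Service Fabric's own scaling policies are not reproduced here.

```python
import math


def instances_needed(requests_per_sec: float, capacity_per_instance: float,
                     min_instances: int = 2) -> int:
    """Smallest instance count that covers the load, never below min_instances."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    return max(min_instances, math.ceil(requests_per_sec / capacity_per_instance))


if __name__ == "__main__":
    # Hypothetical daily load profile (requests per second) and per-instance capacity.
    profile = {"03:00": 40, "11:00": 900, "18:00": 1500}
    for hour, load in profile.items():
        print(hour, instances_needed(load, capacity_per_instance=200))
```

With these assumed numbers the service needs 2 instances at 03:00, 5 at 11:00 and 8 at 18:00; provisioning statically for the evening peak would keep 8 instances running all night.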

Next steps

Some occasionally used functions will become “serverless”. Running them requires no prior activation, hence the name, which is admittedly a bit of a stretch: servers are still needed, even though they are managed by the platform and shared with others. You pay only for the seconds each execution takes. Besides eliminating the cost of dedicated servers that would otherwise sit partly idle, serverless computing also ensures maximum scalability, because there is practically no limit to the number of functions that can run in parallel.
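The pay-per-second model can be compared with a dedicated server through a small calculation. The prices below are invented for illustration, and real platforms usually meter other dimensions as well, such as memory and number of executions; the point is only that a rarely used function costs little when billed per second of execution, while a dedicated server is billed for every hour, idle or not.

```python
from decimal import Decimal

# Invented prices for illustration only; they are not any provider's actual rates.
PRICE_PER_SECOND = Decimal("0.00002")  # serverless: billed per second of execution
PRICE_PER_HOUR = Decimal("0.05")       # dedicated server: billed every hour, busy or idle
HOURS_PER_MONTH = 730


def serverless_monthly_cost(runs: int, seconds_per_run: int) -> Decimal:
    """Cost when paying only for the seconds actually spent executing."""
    return PRICE_PER_SECOND * runs * seconds_per_run


def dedicated_monthly_cost() -> Decimal:
    """Cost of keeping one server allocated for the whole month."""
    return PRICE_PER_HOUR * HOURS_PER_MONTH


def break_even_seconds() -> Decimal:
    """Monthly execution seconds above which the dedicated server becomes cheaper."""
    return dedicated_monthly_cost() / PRICE_PER_SECOND


if __name__ == "__main__":
    print("serverless:", serverless_monthly_cost(runs=300, seconds_per_run=2))
    print("dedicated: ", dedicated_monthly_cost())
    print(f"break-even: {break_even_seconds():,.0f} execution seconds per month")
```

With these assumed prices, 300 two-second runs per month cost 0.012 against 36.5 for the dedicated server, and serverless stays cheaper until the function executes for about 1.8 million seconds a month.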

The significant investment made almost ten years ago in redesigning aKite around the new Cloud paradigms confirms the old saying “Well begun is half done”.

© Copyright 2023 aKite srl